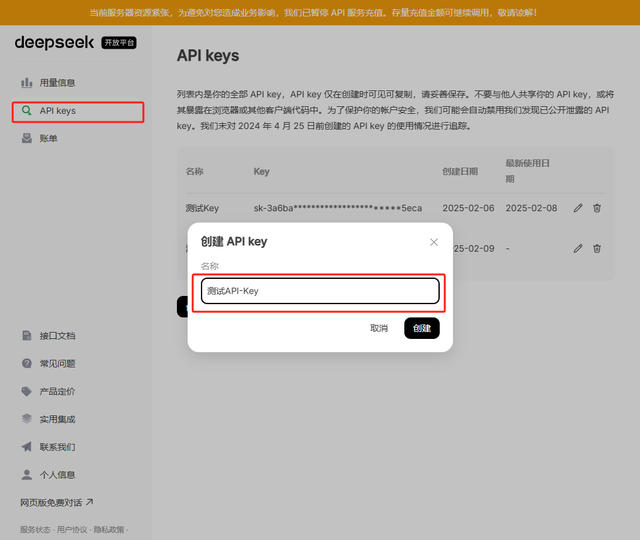

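Create API keys. After you enter a name for the key, an API key is generated. Save this key somewhere safe, because it cannot be viewed again through the management console.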

View usage information

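Top-ups on the DeepSeek platform are currently suspended, but DeepSeek grants each account a trial credit of 10 CNY. Once the trial credit on one account is used up, you can repeat the steps above with a different account to request a new API key.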

First API call: a Python example

Install the dependency:

```shell
pip3 install openai
```

Python source:

```python
from openai import OpenAI

client = OpenAI(api_key="<DeepSeek API Key>", base_url="https://api.deepseek.com")

response = client.chat.completions.create(
    model="deepseek-chat",
    messages=[
        {"role": "system", "content": "You are a helpful assistant"},
        {"role": "user", "content": "Summarize the main features and advantages of DeepSeek."},
    ],
    stream=False
)

print(response.choices[0].message.content)
```

Notes:

- Replace `<DeepSeek API Key>` in the code with your own API key.
- The examples were run with Python 3.11.5.

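DeepSeek models and pricing

- The latest version of the `deepseek-chat` model is DeepSeek-V3 (enabled by default).
- The latest version of the `deepseek-reasoner` model is DeepSeek-R1 (enabled when "Deep Thinking" is checked).
- `deepseek-reasoner` is DeepSeek's chain-of-thought model: it outputs its reasoning process before giving the final answer.
- When calling the API, the default maximum output length is 4K tokens. Use `max_tokens` to adjust it and allow longer output.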

Common DeepSeek API error codes

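Temperature settings

| Use case | Temperature |
|---|---|
| Code generation / math problem solving | 0.0 |
| Data extraction / analysis | 1.0 |
| General conversation | 1.3 |
| Translation | 1.3 |
| Creative writing / poetry | 1.5 |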
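The recommended temperatures above can be kept in one place and looked up by use case. The following is a minimal sketch: the use-case labels and the `pick_temperature` helper are hypothetical names introduced here, and the fallback of 1.0 is an assumption based on the `"temperature": 1` value used in the request examples in this article.

```python
# Recommended temperatures from the table above.
# The dictionary keys are hypothetical labels chosen for this sketch.
RECOMMENDED_TEMPERATURE = {
    "coding_math": 0.0,       # code generation / math problem solving
    "data_analysis": 1.0,     # data extraction / analysis
    "general_chat": 1.3,      # general conversation
    "translation": 1.3,       # translation
    "creative_writing": 1.5,  # creative writing / poetry
}


def pick_temperature(use_case: str, default: float = 1.0) -> float:
    """Return the recommended temperature, or `default` for unknown use cases."""
    return RECOMMENDED_TEMPERATURE.get(use_case, default)


print(pick_temperature("coding_math"))
print(pick_temperature("translation"))
```

The returned value can then be passed as the `temperature` argument of `client.chat.completions.create(...)`.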

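DeepSeek's four message formats

System message

| Parameter | Type | Description |
|---|---|---|
| content | string | Message content |
| role | string | Role of the message author; the value is `system` |
| name | string | Participant name, which helps the model distinguish participants that share the same role. Optional. |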

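User message

| Parameter | Type | Description |
|---|---|---|
| content | string | Message content |
| role | string | Role of the message author; the value is `user` |
| name | string | Participant name, which helps the model distinguish participants that share the same role. Optional. |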

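Assistant message

| Parameter | Type | Description |
|---|---|---|
| content | string | Message content |
| role | string | Role of the message author; the value is `assistant` |
| name | string | Participant name, which helps the model distinguish participants that share the same role. Optional. |
| prefix | bool | When set to `true`, forces the model to begin its answer with the prefix content supplied in this assistant message. To use this feature, `base_url` must be set to `https://api.deepseek.com/beta`. |
| reasoning_content | string | Used with the `deepseek-reasoner` model in chat prefix completion, as the chain-of-thought input of the last assistant message. When using it, `prefix` must be set to `true`. |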

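Tool message

| Parameter | Type | Description |
|---|---|---|
| content | string | Message content |
| role | string | Role of the message author; the value is `tool` |
| tool_call_id | string | ID of the tool call that this message responds to |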
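The four message formats can be checked locally before a request is sent. The sketch below encodes only what the tables above state; `validate_message` and `ALLOWED_FIELDS` are hypothetical names for illustration, and the real API may accept fields or combinations that this check rejects.

```python
# Fields listed for each role in the tables above.
ALLOWED_FIELDS = {
    "system": {"role", "content", "name"},
    "user": {"role", "content", "name"},
    "assistant": {"role", "content", "name", "prefix", "reasoning_content"},
    "tool": {"role", "content", "tool_call_id"},
}


def validate_message(msg: dict) -> list[str]:
    """Return a list of problems; an empty list means the message matches the tables."""
    role = msg.get("role")
    if role not in ALLOWED_FIELDS:
        return [f"unknown role: {role!r}"]

    problems = []
    unknown = set(msg) - ALLOWED_FIELDS[role]
    if unknown:
        problems.append(f"unexpected fields for {role}: {sorted(unknown)}")
    if not isinstance(msg.get("content"), str):
        problems.append("content must be a string")
    # A tool message must say which tool call it answers.
    if role == "tool" and not isinstance(msg.get("tool_call_id"), str):
        problems.append("tool message needs a string tool_call_id")
    # reasoning_content is only described together with prefix=true.
    if "reasoning_content" in msg and msg.get("prefix") is not True:
        problems.append("reasoning_content requires prefix=True")
    return problems


print(validate_message({"role": "user", "content": "hi"}))
print(validate_message({"role": "tool", "content": "42"}))
```

Running such a check before each request can surface malformed messages earlier than a round trip to the API would.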

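DeepSeek API return values

| Parameter | Type | Description |
|---|---|---|
| id | string | Unique identifier of the conversation |
| choices | object[] | List of completion choices generated by the model |
| created | integer | Unix timestamp (in seconds) of when the chat completion was created |
| model | string | Name of the model that generated the completion |
| object | string | Object type; the value is `chat.completion` |
| usage | object | Usage information for this chat completion request |

Fields of each element in `choices`:

- `finish_reason` (string): the reason the model stopped generating.
  - `stop`: the model stopped naturally, or hit one of the strings in the `stop` sequence.
  - `length`: the output reached the model's context length limit or the `max_tokens` limit.
  - `content_filter`: the output was filtered because it triggered the content filtering policy.
  - `insufficient_system_resource`: generation was interrupted because the system ran short of inference resources.
- `index` (integer): index of this completion in the list of choices.
- `message`: the completion message generated by the model.
  - `content`: content of the completion.
  - `reasoning_content`: `deepseek-reasoner` only; the reasoning the assistant produced before the final answer.
  - `tool_calls`: tool calls generated by the model, for example function calls.
  - `role`: the role that generated this message.
- `logprobs`: log-probability information for this choice.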

Fields of `usage`:

- `completion_tokens`: number of tokens in the completion generated by the model.
- `prompt_tokens`: number of tokens in the user prompt; equal to `prompt_cache_hit_tokens + prompt_cache_miss_tokens`.
- `prompt_cache_hit_tokens`: number of prompt tokens that hit the context cache.
- `prompt_cache_miss_tokens`: number of prompt tokens that missed the context cache.
- `total_tokens`: total number of tokens in the request (prompt + completion).
- `completion_tokens_details`: detailed breakdown of the completion tokens.

Three ways to call the chat API from Python

OpenAI SDK

Source example:

```python
from openai import OpenAI

# For backward compatibility, you can still use `https://api.deepseek.com/v1` as `base_url`.
client = OpenAI(api_key="<your API key>", base_url="https://api.deepseek.com")

response = client.chat.completions.create(
    model="deepseek-chat",
    messages=[
        {"role": "system", "content": "You are a helpful assistant"},
        {"role": "user", "content": "Summarize the main features and advantages of DeepSeek."},
    ],
    max_tokens=1024,
    temperature=0.7,
    stream=False
)

print(response.choices[0].message.content)
```

Requests

Source example:

```python
import requests
import json

url = "https://api.deepseek.com/chat/completions"

payload = json.dumps({
    "messages": [
        {"content": "You are a helpful assistant", "role": "system"},
        {"content": "Summarize the main features and advantages of DeepSeek.", "role": "user"}
    ],
    "model": "deepseek-chat",
    "frequency_penalty": 0,
    "max_tokens": 2048,
    "presence_penalty": 0,
    "response_format": {"type": "text"},
    "stop": None,
    "stream": False,
    "stream_options": None,
    "temperature": 1,
    "top_p": 1,
    "tools": None,
    "tool_choice": "none",
    "logprobs": False,
    "top_logprobs": None
})
headers = {
    'Content-Type': 'application/json',
    'Accept': 'application/json',
    'Authorization': 'Bearer <your API key>'
}

response = requests.request("POST", url, headers=headers, data=payload)

print(response.text)
```

http.client

Source example:

```python
import http.client
import json

conn = http.client.HTTPSConnection("api.deepseek.com")

payload = json.dumps({
    "messages": [
        {"content": "You are a helpful assistant", "role": "system"},
        {"content": "Summarize the main features and advantages of DeepSeek.", "role": "user"}
    ],
    "model": "deepseek-chat",
    "frequency_penalty": 0,
    "max_tokens": 2048,
    "presence_penalty": 0,
    "response_format": {"type": "text"},
    "stop": None,
    "stream": False,
    "stream_options": None,
    "temperature": 1,
    "top_p": 1,
    "tools": None,
    "tool_choice": "none",
    "logprobs": False,
    "top_logprobs": None
})
headers = {
    'Content-Type': 'application/json',
    'Accept': 'application/json',
    'Authorization': 'Bearer <your API key>'
}

conn.request("POST", "/chat/completions", payload, headers)
res = conn.getresponse()
data = res.read()

print(data.decode("utf-8"))
```
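The Requests and http.client examples print the raw response body. As a rough sketch of how the fields from the return value table might be read out of that body, the helper below parses the JSON text and collects the answer, the stop reason, and token usage. `summarize_completion` is a hypothetical helper, and `sample_body` is a hand-written illustration that mirrors the documented field names, not real API output.

```python
import json


def summarize_completion(body: str) -> dict:
    """Extract the answer text, stop reason, and token usage from a response body."""
    data = json.loads(body)
    choice = data["choices"][0]
    usage = data.get("usage", {})
    finish_reason = choice.get("finish_reason")
    return {
        "text": choice["message"].get("content"),
        "finish_reason": finish_reason,
        # "length" means the output hit max_tokens or the context limit.
        "truncated": finish_reason == "length",
        "prompt_tokens": usage.get("prompt_tokens"),
        "completion_tokens": usage.get("completion_tokens"),
        "cache_hit_tokens": usage.get("prompt_cache_hit_tokens"),
    }


# Hand-written sample for illustration only; the values are made up.
sample_body = json.dumps({
    "id": "example-id",
    "object": "chat.completion",
    "model": "deepseek-chat",
    "choices": [{
        "index": 0,
        "finish_reason": "length",
        "message": {"role": "assistant", "content": "DeepSeek is"},
    }],
    "usage": {
        "prompt_tokens": 12,
        "completion_tokens": 3,
        "total_tokens": 15,
        "prompt_cache_hit_tokens": 0,
        "prompt_cache_miss_tokens": 12,
    },
})

print(summarize_completion(sample_body))
```

When `truncated` is true, raising `max_tokens` and retrying is one option to consider.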

Three examples of calling FIM completion from Python

OpenAI SDK

Source example:

```python
from openai import OpenAI

client = OpenAI(
    api_key="<your API key>",
    base_url="https://api.deepseek.com/beta",
)

response = client.completions.create(
    model="deepseek-chat",
    prompt="def fib(a):",
    suffix=" return fib(a-1) + fib(a-2)",
    max_tokens=128
)

print(response.choices[0].text)
```

Requests

Source example:

```python
import requests
import json

url = "https://api.deepseek.com/beta/completions"

payload = json.dumps({
    "model": "deepseek-chat",
    "prompt": "def fib(a):",
    "echo": False,
    "frequency_penalty": 0,
    "logprobs": 0,
    "max_tokens": 128,
    "presence_penalty": 0,
    "stop": None,
    "stream": False,
    "stream_options": None,
    "suffix": " return fib(a-1) + fib(a-2)",
    "temperature": 1,
    "top_p": 1
})
headers = {
    'Content-Type': 'application/json',
    'Accept': 'application/json',
    'Authorization': 'Bearer <your API key>'
}

response = requests.request("POST", url, headers=headers, data=payload)

print(response.text)
```

http.client

Source example:

```python
import http.client
import json

conn = http.client.HTTPSConnection("api.deepseek.com")

payload = json.dumps({
    "model": "deepseek-chat",
    "prompt": "def fib(a):",
    "echo": False,
    "frequency_penalty": 0,
    "logprobs": 0,
    "max_tokens": 128,
    "presence_penalty": 0,
    "stop": None,
    "stream": False,
    "stream_options": None,
    "suffix": " return fib(a-1) + fib(a-2)",
    "temperature": 1,
    "top_p": 1
})
headers = {
    'Content-Type': 'application/json',
    'Accept': 'application/json',
    'Authorization': 'Bearer <your API key>'
}

conn.request("POST", "/beta/completions", payload, headers)
res = conn.getresponse()
data = res.read()

print(data.decode("utf-8"))
```

Three ways to call the list-models API from Python

OpenAI SDK

Source example:

```python
from openai import OpenAI

# For backward compatibility, you can still use `https://api.deepseek.com/v1` as `base_url`.
client = OpenAI(api_key="<your API key>", base_url="https://api.deepseek.com")

print(client.models.list())
```

Requests

Source example:

```python
import requests

url = "https://api.deepseek.com/models"

payload = {}
headers = {
    'Accept': 'application/json',
    'Authorization': 'Bearer <your API key>'
}

response = requests.request("GET", url, headers=headers, data=payload)

print(response.text)
```

http.client

Source example:

```python
import http.client

conn = http.client.HTTPSConnection("api.deepseek.com")

payload = ''
headers = {
    'Accept': 'application/json',
    'Authorization': 'Bearer <your API key>'
}

conn.request("GET", "/models", payload, headers)
res = conn.getresponse()
data = res.read()

print(data.decode("utf-8"))
```

Two ways to query the account balance from Python

Requests

Source example:

```python
import requests

url = "https://api.deepseek.com/user/balance"

payload = {}
headers = {
    'Accept': 'application/json',
    'Authorization': 'Bearer <your API key>'
}

response = requests.request("GET", url, headers=headers, data=payload)

print(response.text)
```

http.client

Source example:

```python
import http.client

conn = http.client.HTTPSConnection("api.deepseek.com")

payload = ''
headers = {
    'Accept': 'application/json',
    'Authorization': 'Bearer <your API key>'
}

conn.request("GET", "/user/balance", payload, headers)
res = conn.getresponse()
data = res.read()

print(data.decode("utf-8"))
```

Streaming and non-streaming examples for the reasoning model

`deepseek-reasoner` is the reasoning model released by DeepSeek. Before producing the final result, the model first outputs a chain of thought, which improves the accuracy of the final answer.

- Before using `deepseek-reasoner`, upgrade the `openai` package to the latest version: `pip install -U openai`
- If `reasoning_content` is included in the input `messages` sequence, the API returns a 400 error, so the `reasoning_content` field must be removed before sending.

Python non-streaming example

Source example:

```python
from openai import OpenAI

client = OpenAI(api_key="<DeepSeek API Key>", base_url="https://api.deepseek.com")

# Round 1
messages = [{"role": "user", "content": "9.11 and 9.8, which is greater?"}]
response = client.chat.completions.create(
    model="deepseek-reasoner",
    messages=messages
)

reasoning_content = response.choices[0].message.reasoning_content
content = response.choices[0].message.content
print(f"Round1 content: {content}")

# Round 2: append only `content`, not `reasoning_content`
messages.append({'role': 'assistant', 'content': content})
messages.append({'role': 'user', 'content': "How many Rs are there in the word 'strawberry'?"})
response = client.chat.completions.create(
    model="deepseek-reasoner",
    messages=messages
)

reasoning_content = response.choices[0].message.reasoning_content
content = response.choices[0].message.content
print(f"Round2 content: {content}")
# ...
```

Python streaming example

Source example:

```python
from openai import OpenAI

client = OpenAI(api_key="<DeepSeek API Key>", base_url="https://api.deepseek.com")

# Round 1
messages = [{"role": "user", "content": "9.11 and 9.8, which is greater?"}]
response = client.chat.completions.create(
    model="deepseek-reasoner",
    messages=messages,
    stream=True
)

reasoning_content = ""
content = ""

# Each chunk carries either a piece of the chain of thought or a piece of the answer.
for chunk in response:
    if chunk.choices[0].delta.reasoning_content:
        reasoning_content += chunk.choices[0].delta.reasoning_content
    elif chunk.choices[0].delta.content:
        content += chunk.choices[0].delta.content
print(f'round1 content: {content}')

# Round 2
messages.append({"role": "assistant", "content": content})
messages.append({'role': 'user', 'content': "How many Rs are there in the word 'strawberry'?"})
response = client.chat.completions.create(
    model="deepseek-reasoner",
    messages=messages,
    stream=True
)

reasoning_content = ""
content = ""

for chunk in response:
    if chunk.choices[0].delta.reasoning_content:
        reasoning_content += chunk.choices[0].delta.reasoning_content
    elif chunk.choices[0].delta.content:
        content += chunk.choices[0].delta.content
print(f'round2 content: {content}')
```

Result screenshot:

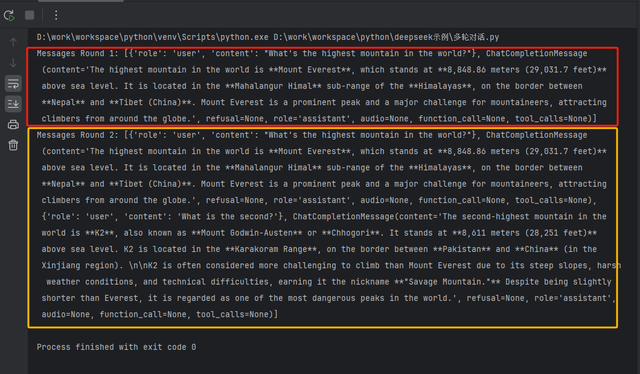
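Because passing `reasoning_content` back in `messages` causes a 400 error, it can help to clean the history in one place before every request. The following is a minimal sketch; `strip_reasoning` is a hypothetical helper name, and it deliberately ignores the beta prefix-completion case, where `reasoning_content` is allowed on the last assistant message.

```python
def strip_reasoning(messages: list[dict]) -> list[dict]:
    """Return a copy of the history with every `reasoning_content` field removed."""
    return [
        {key: value for key, value in message.items() if key != "reasoning_content"}
        for message in messages
    ]


history = [
    {"role": "user", "content": "9.11 and 9.8, which is greater?"},
    {
        "role": "assistant",
        "content": "9.8 is greater.",
        "reasoning_content": "Compare 9.11 with 9.80 digit by digit.",
    },
    {"role": "user", "content": "How many Rs are there in the word 'strawberry'?"},
]

clean = strip_reasoning(history)
print(clean[1])
```

The original list is left unchanged, so the chain of thought can still be logged or displayed separately.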

Multi-turn conversation example in Python

DeepSeek's `chat/completions` API is stateless: the server does not keep the context of previous requests. On every request, the caller must concatenate the full conversation history and pass it to the chat API.

Source example:

```python
from openai import OpenAI

client = OpenAI(api_key="<DeepSeek API Key>", base_url="https://api.deepseek.com")

# Round 1
messages = [{"role": "user", "content": "What's the highest mountain in the world?"}]
response = client.chat.completions.create(
    model="deepseek-chat",
    messages=messages
)

messages.append(response.choices[0].message)
print(f"Messages Round 1: {messages}")

# Round 2
messages.append({"role": "user", "content": "What is the second?"})
response = client.chat.completions.create(
    model="deepseek-chat",
    messages=messages
)

messages.append(response.choices[0].message)
print(f"Messages Round 2: {messages}")
```

In the first round, the `messages` passed to the API are:

```python
[
    {"role": "user", "content": "What's the highest mountain in the world?"}
]
```

In the second round, the model's output from the first round must be appended to `messages`, followed by the new question. The `messages` finally passed to the API are:

```python
[
    {"role": "user", "content": "What's the highest mountain in the world?"},
    {"role": "assistant", "content": "The highest mountain in the world is Mount Everest."},
    {"role": "user", "content": "What is the second?"}
]
```
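Since the API is stateless, the history bookkeeping can be wrapped in a small helper. The sketch below is one possible design, not part of the DeepSeek SDK: `Conversation` and `fake_send` are hypothetical names, and the network call is injected as a function so that the history logic can be exercised without an API key.

```python
from typing import Callable


class Conversation:
    """Keeps the full message history and resends it on every turn."""

    def __init__(self, send: Callable[[list[dict]], str]):
        self._send = send  # function that takes the history and returns the reply text
        self.messages: list[dict] = []

    def ask(self, question: str) -> str:
        self.messages.append({"role": "user", "content": question})
        # Pass a copy so the sender cannot mutate the stored history.
        answer = self._send(list(self.messages))
        self.messages.append({"role": "assistant", "content": answer})
        return answer


def fake_send(messages: list[dict]) -> str:
    """Stand-in for the API call: reports how many user turns it received."""
    user_turns = sum(1 for m in messages if m["role"] == "user")
    return f"reply #{user_turns}"


chat = Conversation(fake_send)
first = chat.ask("What's the highest mountain in the world?")
second = chat.ask("What is the second?")
print(first, second, len(chat.messages))
```

To talk to the real API, `fake_send` could be replaced with a function that calls `client.chat.completions.create(model="deepseek-chat", messages=messages)` and returns `response.choices[0].message.content`.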

JSON output

Notes

- Set the `response_format` parameter to `{'type': 'json_object'}`.
- The system or user prompt must contain the word "json" and give an example of the desired JSON format, to guide the model toward valid JSON output.
- Set `max_tokens` sensibly so that the JSON string is not cut off midway.
- When using the JSON output feature, the API may occasionally return empty `content`. DeepSeek is working on this issue; adjusting the prompt may mitigate it.

Source example:

```python
import json
from openai import OpenAI

client = OpenAI(
    api_key="<your api key>",
    base_url="https://api.deepseek.com",
)

system_prompt = """
The user will provide some exam text. Please parse the "question" and "answer" and output them in JSON format.

EXAMPLE INPUT:
Which is the highest mountain in the world? Mount Everest.

EXAMPLE JSON OUTPUT:
{
    "question": "Which is the highest mountain in the world?",
    "answer": "Mount Everest"
}
"""

user_prompt = "Which is the longest river in the world? The Nile River."

messages = [{"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt}]

response = client.chat.completions.create(
    model="deepseek-chat",
    messages=messages,
    response_format={
        'type': 'json_object'
    }
)

print(json.loads(response.choices[0].message.content))
```
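Because the API may return empty `content`, and a low `max_tokens` can cut the JSON string short, calling `json.loads` directly on the response can raise an exception. A defensive parse might look like the sketch below; `parse_json_content` is a hypothetical helper, and returning `None` on failure is a design choice made for this illustration.

```python
import json
from typing import Optional


def parse_json_content(content: Optional[str]) -> Optional[dict]:
    """Parse model output as a JSON object; return None if it is empty or invalid."""
    if not content or not content.strip():
        return None  # the API returned empty content
    try:
        parsed = json.loads(content)
    except json.JSONDecodeError:
        return None  # for example, JSON truncated by max_tokens
    return parsed if isinstance(parsed, dict) else None


print(parse_json_content('{"question": "Which is the longest river?", "answer": "The Nile"}'))
print(parse_json_content(""))
print(parse_json_content('{"question": "Which is'))
```

A caller that receives `None` can then decide whether to retry, adjust the prompt, or raise `max_tokens`.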

Custom function calling

The Function Calling feature in the current version is unstable, so it is not demonstrated here for now.